EntraID MSGraph Powershell Set or Query Device extensionAttributes

I was recently tasked with using the Microsoft Graph PowerShell SDK (think Get-MgDevice and Update-MgDevice) to query, clear, or update any one of the 15 extensionAttributes available for Azure / EntraID devices.

Devices. Not users. There were lots of examples out there on setting attributes for users in EntraID, but the examples for devices were sparse, or no longer valid.

In case someone is tasked with doing something similar in the future: as of February 2024, this "worked for me". The account running this needs permission to modify devices in your tenant (for example, Device.ReadWrite.All or a similar scope).

See the code below.

How to run the script:

Prerequisites: hard-code your $ClientID, $ClientSecret, and $TenantId (or pass them as parameters when you call the script)

Example:

To Populate if null or Modify extensionAttribute7

ThisScript.ps1 -ComputerName 'SomeComputerInAzure' -ExtensionID 'extensionAttribute7' -ExtensionValue 'Hawaii' -Action 'Set'

To clear extensionAttribute7

ThisScript.ps1 -ComputerName 'SomeComputerInAzure' -ExtensionID 'extensionAttribute7' -ExtensionValue '' -Action 'Set'

To query extensionAttribute7 for a device

ThisScript.ps1 -ComputerName 'SomeComputerInAzure' -ExtensionID 'extensionAttribute7' -Action 'Query'

What does the script actually "do"

- Install the NuGet package provider if it is not already there

- Import the module Microsoft.Graph.DeviceManagement if not already imported.

- Connect to msgraph with your credentials for your client and tenant

- Build the $Attributes variable you will need to Set or Clear (if that is what you are going to do later)

- Find the device in Azure (fail if it is not found by the name you passed in)

- If found, and you said "Set", set the attribute you said to the value you said.

- If found, and you said "Query", ask EntraID for the value of that extensionAttribute[x]
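The "Build the $Attributes variable" step above boils down to a nested hashtable keyed by the attribute name. Here is a minimal sketch of just that step. The helper name New-ExtensionAttributeBody is made up for illustration (it is not part of the script or the Graph SDK), and the sketch makes no Graph calls; it only builds the body and prints its JSON shape.

```powershell
# Hypothetical helper (not in the original script or the Graph SDK):
# builds the nested hashtable that the script hands to Update-MgDevice -BodyParameter.
function New-ExtensionAttributeBody {
    param (
        [Parameter(Mandatory)][string]$ExtensionID,
        [AllowEmptyString()][string]$ExtensionValue = ''
    )
    # An empty string is what the script sends when it clears an attribute.
    @{ extensionAttributes = @{ $ExtensionID = $ExtensionValue } }
}

$body = New-ExtensionAttributeBody -ExtensionID 'extensionAttribute7' -ExtensionValue 'Hawaii'

# Print the JSON shape of the body
$body | ConvertTo-Json -Compress
# → {"extensionAttributes":{"extensionAttribute7":"Hawaii"}}
```

Calling the helper without -ExtensionValue gives the same shape with an empty string, which matches what the script does for a "clear".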

Script:

    <#
    .SYNOPSIS
        Using MSGraph, connect to your Azure Tenant, and set (or remove) any one of the 15 'extensionAttribute' for a Device.
    .DESCRIPTION
        With Parameters for ComputerName, extensionAttribute, and Value, set or remove a value of that extensionAttribute, only for Devices (not users)
    .NOTES
        2024-02-06 Sherry Kissinger
        Code lovingly stolen from multiple sources, like Christian Smit, and sources like these:
        https://office365itpros.com/2022/09/06/entra-id-registered-devices/
        https://www.michev.info/blog/post/3472/configuring-extension-attributes-for-devices-in-azure-ad
        2024-02-08 Sherry Kissinger and Benjamin Reynolds
        Get the -Action 'Query' part to work successfully. Thanks Benjamin!!!

        Examples:
        To Populate if null or Modify extensionAttribute7
        ThisScript.ps1 -ComputerName 'SomeComputerInAzure' -ExtensionID 'extensionAttribute7' -ExtensionValue 'Hawaii' -Action 'Set'
        To clear extensionAttribute7
        ThisScript.ps1 -ComputerName 'SomeComputerInAzure' -ExtensionID 'extensionAttribute7' -ExtensionValue '' -Action 'Set'
        To query extensionAttribute7 for a device
        ThisScript.ps1 -ComputerName 'SomeComputerInAzure' -ExtensionID 'extensionAttribute7' -ExtensionValue '' -Action 'Query'
    #>
    param (
        [string]$ClientID = "12345678-1234-1234-1234-12345678901234",
        [string]$ClientSecret = "SuperSecretSecretySecretHere",
        [string]$TenantId = "87654321-4321-4321-4321-210987654321",
        [Parameter(Mandatory)][string]$ComputerName,
        [Parameter(Mandatory)]
        [ValidateSet("extensionAttribute1","extensionAttribute2","extensionAttribute3","extensionAttribute4","extensionAttribute5","extensionAttribute6","extensionAttribute7","extensionAttribute8","extensionAttribute9","extensionAttribute10","extensionAttribute11","extensionAttribute12","extensionAttribute13","extensionAttribute14","extensionAttribute15", IgnoreCase=$false)]
        [string]$ExtensionID,
        [AllowNull()][AllowEmptyString()][string]$ExtensionValue,
        [Parameter(Mandatory)][string][ValidateSet("Query","Set")]$Action
    )

    #
    # IMPORTING MODULES
    #
    Write-Host "Importing modules"

    # Get NuGet
    $provider = Get-PackageProvider NuGet -ErrorAction Ignore
    if (-not $provider) {
        Write-Host "Installing provider NuGet..." -NoNewline
        try {
            Find-PackageProvider -Name NuGet -ForceBootstrap -IncludeDependencies -Force -ErrorAction Stop
            Write-Host "Success" -ForegroundColor Green
        }
        catch {
            Write-Host "Failed" -ForegroundColor Red
            throw $_.Exception.Message
        }
    }

    # Note: Get-MgDevice and Update-MgDevice ship in Microsoft.Graph.Identity.DirectoryManagement;
    # PowerShell auto-loads that module on first use if it is installed.
    $module = Import-Module Microsoft.Graph.DeviceManagement -PassThru -ErrorAction Ignore
    if (-not $module) {
        Write-Host "Installing module Microsoft.Graph.DeviceManagement..." -NoNewline
        try {
            Install-Module Microsoft.Graph.DeviceManagement -Scope CurrentUser -Force -ErrorAction Stop
            Write-Host "Success" -ForegroundColor Green
        }
        catch {
            Write-Host "Failed" -ForegroundColor Red
            throw $_.Exception.Message
        }
    }

    #
    # CONNECT TO GRAPH
    #
    $credential = [PSCredential]::New($ClientID, ($ClientSecret | ConvertTo-SecureString -AsPlainText -Force))
    Connect-MgGraph -TenantId $TenantId -ClientSecretCredential $credential -Verbose

    # Build the request body; an empty string clears the attribute
    If ([string]::IsNullOrEmpty($ExtensionValue)) {
        $Attributes = @{ "extensionAttributes" = @{ $ExtensionID = "" } }
    }
    else {
        $Attributes = @{ "extensionAttributes" = @{ $ExtensionID = $ExtensionValue } }
    }

    # COMPUTER
    #################################
    Write-Host "Locating device in" -NoNewline
    Write-Host " Azure AD" -NoNewline -ForegroundColor Yellow
    Write-Host "..." -NoNewline
    try {
        $AADDevice = Get-MgDevice -Search "displayName:$ComputerName" -CountVariable CountVar -ConsistencyLevel eventual -ErrorAction Stop
    }
    catch {
        Write-Host "Fail" -ForegroundColor Red
        Write-Error "$($_.Exception.Message)"
        $LocateInAADFailure = $true
    }

    If ($LocateInAADFailure -ne $true) {
        If ($AADDevice.Count -eq 0) {
            Write-Host "Fail" -ForegroundColor Red
            Write-Warning "Device not found in Azure AD"
        }
        else {
            # The search can match more than one device, so act on each match
            ForEach ($AADDeviceObj in $AADDevice) {
                Write-Host "Success" -ForegroundColor Green
                Write-Host " DisplayName: $($AADDeviceObj.DisplayName)"
                Write-Host " ObjectId: $($AADDeviceObj.Id)"
                Write-Host " DeviceId: $($AADDeviceObj.DeviceId)"
                Write-Host "..." -NoNewline

                if ($Action -eq 'Set') {
                    try {
                        Write-Host " Azure AD trying to add Value to extensionAttribute" -ForegroundColor Yellow
                        $Result = Update-MgDevice -DeviceId $($AADDeviceObj.Id) -BodyParameter $Attributes -ErrorAction Stop
                    }
                    catch {
                        Write-Host "Fail" -ForegroundColor Red
                        Write-Error "$($_.Exception.Message)"
                    }
                }

                if ($Action -eq 'Query') {
                    try {
                        Write-Host " Azure AD query extensionAttributes" -ForegroundColor Yellow
                        $TheIDValue = (Get-MgDevice -DeviceId $($AADDeviceObj.Id) -Property id,deviceId,displayName,extensionAttributes -ErrorAction Stop).AdditionalProperties.extensionAttributes.$ExtensionID
                        Write-Host " $ExtensionID is: $TheIDValue" -ForegroundColor Yellow
                    }
                    catch {
                        Write-Host "Fail" -ForegroundColor Red
                        Write-Error "$($_.Exception.Message)"
                    }
                }
            }
        }
    }

CM SQL Query for is LedBat Enabled on the Site Role Servers

I had occasion to check whether LEDBAT was enabled in the DP and SUP configurations. Yes, I absolutely could go look in the console: go to every server with a DP or SUP role, right-click, and look. But, being me, I knew that information had to be in SQL somewhere, so I spent time looking for it. Could I have just done this manually, in less time? Likely. But since I've spent the time creating the SQL to look this up, this blog gets to have the results of my research.

The one element I'm not sure of is 'Flags', which apparently has "something to do with whether or not a DP role has this enabled". For me, a value of 4 meant "yes". But since it IS a 'Flag' value, different values could well have different meanings, and I couldn't find out what those meanings are. So if you find in your environment that 'Flags' = 4 vs. 'Flags' <> 4 doesn't necessarily mean "yes, DP LEDBAT is enabled", that's certainly a possibility, and you may need to adjust the SQL query to match your reality.
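If 'Flags' does turn out to be a bitmask (an assumption; the meaning of the individual bits is not confirmed anywhere in this post), a straight equality test against 4 would miss values such as 5 or 6 that also have the 4 bit set. A small sketch of the difference, using a made-up helper name Test-FlagBit:

```powershell
# Hypothetical helper: returns $true when every bit in $Bit is set in $Flags.
# ASSUMPTION: 'Flags' is a bitmask and 4 is the bit of interest; neither is confirmed.
function Test-FlagBit {
    param (
        [int]$Flags,
        [int]$Bit = 4
    )
    ($Flags -band $Bit) -eq $Bit
}

# Compare the two tests for a few sample values
foreach ($value in 0, 4, 5, 6, 8) {
    '{0}: equals 4 = {1}, has bit 4 = {2}' -f $value, ($value -eq 4), (Test-FlagBit -Flags $value)
}
```

Values 5 and 6 fail the equality test but pass the bit test. If that matches what you see in your environment, the equivalent T-SQL condition would be (srup.Value3 & 4) = 4.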

Anyway... for a starting point, here's the SQL I have so far.

    select
    LEFT(
        RIGHT(SUBSTRING(sru.NalPath,0,CHARINDEX(']',sru.NALPath)),LEN(SUBSTRING(sru.NalPath,0,CHARINDEX(']',sru.NALPath)))-12),
        LEN(RIGHT(SUBSTRING(sru.NalPath,0,CHARINDEX(']',sru.NALPath)),LEN(SUBSTRING(sru.NalPath,0,CHARINDEX(']',sru.NALPath)))-12))-2
    ) as 'ServerName',
    case when srup.Name = 'Flags' then 'DP LedBat'
         else srup.Name end as 'Name',
    Case
        when srup.Name = 'Flags' and srup.Value3 = 4 then '1'
        when srup.Name = 'Flags' and srup.Value3 <> 4 then '0'
        when srup.Name = 'SUP LEDBAT' then cast(Value3 as varchar)
        when srup.Name = 'LocalDriveDOINC' then cast(Value1 as varchar)
        when srup.name = 'DiskSpaceDOINC' then Cast(value3 as varchar) + ' ' + Value1
        when srup.Name = 'RetainDOINCCache' then cast(value3 as varchar)
    End as 'Value',
    case when RoleTypeID = 3 then 'DPSetting'
         when RoleTypeID = 12 then 'SUPSetting' end as 'RoleType',
    roletypeid
    from SC_SysResUse_Property srup with (nolock)
    join sc_sysresuse sru with (nolock) on sru.id=srup.SysResUseID
    where srup.name in ('SUP LEDBAT','Flags','DiskSpaceDOINC','LocalDriveDOINC','RetainDOINCCache')
    and roletypeID in (3,12)
    order by 'Servername',sru.roletypeid, srup.Name

In my lab (with one and only one server), this is the result. A SUP role has only a single yes/no (1/0) result.

For a DP, there is the 'enabled at all', then what might have been chosen for 'Local drive to be used' (LocalDriveDOINC), DiskSpace (DiskSpaceDOINC), and whether or not to retain cache when disabling the Connected Cache server (RetainDOINCCache).

| ServerName | Name | Value | RoleType | roletypeid |
| --- | --- | --- | --- | --- |
| HS5.b.b | DiskSpaceDOINC | 70 GB | DPSetting | 3 |
| HS5.b.b | DP LedBat | 1 | DPSetting | 3 |
| HS5.b.b | LocalDriveDOINC | Automatic | DPSetting | 3 |
| HS5.b.b | RetainDOINCCache | 1 | DPSetting | 3 |
| HS5.b.b | SUP LEDBAT | 1 | SUPSetting | 12 |

Another tip:

While interactively logged into the server, Get-NetTCPSetting -SettingName InternetCustom | Select CongestionProvider should return LEDBAT, and Get-NetTransportFilter -SettingName InternetCustom should list the TCP ports.

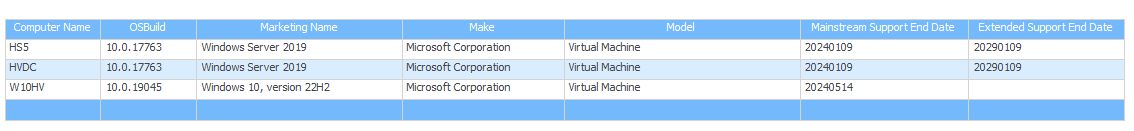

CM Display OS Marketing version in SQL reports

Have you ever noticed that by default, the devices (Windows 10, 11, Servers) don't report up their 'marketing name' in standard inventory? Like 22H2, or 21H2? Ever find that annoying? Sure, you can extend inventory to pull in the regkey of SOFTWARE\Microsoft\Windows NT\CurrentVersion\DisplayVersion, but... you don't have to. That information 'is' buried in the database, it's just not... super easy to find.

Here's a sample report to "Show ComputerName, OSBuild, MarketingName, Make, Model, SupportEndDate for the OS".

The gem, of course, is pulling out the MarketingName.

You could also do fun things like...

- only show records where MainstreamSupportEndDate < GetDate() (aka, stuff out of support)

- only show records where Manufacturer0 = 'Dell, Inc.'

etc etc etc...

    Select
    s1.netbios_name0 as 'ComputerName',
    s1.Build01 as 'OSBuild',
    Case when CHARINDEX('(',ldg.groupName) >0 then rtrim(left(ldg.groupName, CHARINDEX('(',ldg.groupName) -1))
         else ldg.groupName end as 'MarketingName',
    csys.Manufacturer0 as 'Make',
    csys.Model0 as 'Model',
    ldg.MainstreamSupportEndDate,
    ldg.ExtendedSupportEndDate
    from v_LifecycleDetectedGroups ldg
    join v_LifecycleDetectedResourceIdsByGroupName lr on lr.GroupName=ldg.GroupName
    join v_r_system s1 on s1.ResourceID=lr.ResourceID
    left join v_GS_COMPUTER_SYSTEM csys on csys.ResourceID=s1.ResourceID
    where ldg.Category in ('WinClient','WinServer')
    OPTION(USE HINT('FORCE_LEGACY_CARDINALITY_ESTIMATION'))
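The MarketingName expression in that query keeps everything before the first '(' in groupName and trims trailing spaces. Here is the same logic sketched in PowerShell, in case you want to apply it to exported data. The function name Get-MarketingName and the sample strings are made up for illustration; they are not real values from v_LifecycleDetectedGroups.

```powershell
# Hypothetical helper mirroring the SQL CASE/CHARINDEX/LEFT/RTRIM expression.
function Get-MarketingName {
    param ([Parameter(Mandatory)][string]$GroupName)

    # IndexOf is 0-based and returns -1 when '(' is absent
    # (T-SQL CHARINDEX is 1-based and returns 0 when absent).
    $index = $GroupName.IndexOf('(')
    if ($index -ge 0) {
        # Keep the text before the first '(' and drop trailing spaces
        $GroupName.Substring(0, $index).TrimEnd(' ')
    }
    else {
        $GroupName
    }
}

# Made-up sample values, for illustration only
Get-MarketingName -GroupName 'Example OS 22H2 (sample text)'   # → Example OS 22H2
Get-MarketingName -GroupName 'Example OS'                      # → Example OS
```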

CM Icons for Applications, Packages, and Task Sequences

An important, but often neglected, feature of Software Center is the ability to associate icons with visible deployments. You can add an icon to the Available items in Software Center: Applications, Packages (Advertisements), and Task Sequences (Advertisements, or OSD deployments).

A few 'things to know'

- Icons can be added to Applications, Packages (for Advertisements) and Task Sequences (for OSD or more complex deployments)

- "In general", icons in 512x512 format look the best. Pictures or icons GREATER than 512x512 may not be selectable. That doesn't mean you can't use 256x256, or really, any .png as long as it is no larger than 512x512.

- "The file" known as "usethisPicture.png" doesn't get imported when you select it. What gets imported is a string which "represents" that image.

Where to 'get' icons to use.

- The already-installed application itself. For example, you can go to \\SomeWorkstation\c$\Program Files\TheApp\TheApp.exe, and there will usually be an icon that the vendor themselves decided represents that application.

- There are multiple 'icon repositories' out there, for example, iconarchive.com. Perhaps you are looking for "the perfect" AutoCAD icon. Search for AutoCad, and you will be presented with multiple (for free!) options. Select the one you like, download it. Now that you have the .png file, save it in a known location to use later.

- Image manipulation + online .ico converter. If there simply isn't ANYTHING pre-created that you like, you can screenshot something, edit it in an image editor, and/or search the web for something like "free icon converter", which will usually take an input file and output a .png or .ico for you.

- "For fun": in %windir%\system32 is a file called 'moricons.dll' (yes, there is no e in that). It's a holdover from... wait for it... Windows 3.1! Select that file for some old skool nostalgia.

How to associate icons with a...

Application

- Console, Software Library, Application Management, Applications

- Find the application, right-click and select 'Properties'

- Software Center tab, and near the bottom by "icon", Browse...

- Browse to where you identified the icon to use (program files on a workstation, a downloaded .png or .ico file...)

- Once selected, in the console you will see a representation of the icon, OK.

Package

- Console, Software Library, Application Management, Packages

- Find the Package, right-click and select 'Properties'

- Near the bottom by "icon", Browse...

- Browse to where you identified the icon to use (program files on a workstation, a downloaded .png or .ico file...)

- Once selected, in the console you will see a representation of the icon, OK.

Task Sequence

- Console, Software Library, Operating Systems, Task Sequences.

- Find the Task Sequence, right-click and select 'Properties'

- On the 'More Options' tab, near the bottom by "icon", Browse...

- Browse to where you identified the icon to use (program files on a workstation, a downloaded .png or .ico file...)

- Once selected, in the console you will see a representation of the icon, OK.

How to know what Apps, Packages, or Task sequences are missing an icon

So, that's all great that you can... but how do you know which ones are missing an icon, and/or what the icon looks like, without deploying 'everything' to yourself? Why, use a report of course!

It's not perfect: the SQL doesn't filter by deployed AND visible in Software Center, just 'deployed', so something that is required and hidden from the user will still show up on this report even if you select @Deployed=1. But if you want to see whether something is 'missing an icon', check the Iconb column: if it is blank, there is no icon defined.

    --DECLARE @Deployed int = 1

    IF @Deployed = 1
    BEGIN
    ;WITH XMLNAMESPACES ( DEFAULT 'http://schemas.microsoft.com/SystemsCenterConfigurationManager/2009/06/14/Rules', 'http://schemas.microsoft.com/SystemCenterConfigurationManager/2009/AppMgmtDigest' as p1)
    select
    cci.SDMPackageDigest.value('(/p1:AppMgmtDigest/p1:Application/p1:DisplayInfo/p1:Info/p1:Title)[1]', 'nvarchar(max)') AS [Name]
    ,cci.SDMPackageDigest.value('(/p1:AppMgmtDigest/p1:Resources/p1:Icon/p1:Data)[1]', 'nvarchar(max)') AS [Iconb],
    Type='Application'
    ,cci.CI_UniqueID as 'Identifier'
    from vCI_ConfigurationItems cci
    join v_CIAssignment cia on LEFT(cia.AssignmentName, CHARINDEX('_', cia.AssignmentName + '_') - 1)=cci.SDMPackageDigest.value('(/p1:AppMgmtDigest/p1:Application/p1:DisplayInfo/p1:Info/p1:Title)[1]', 'nvarchar(max)')
    where citype_id=10
    and cci.IsLatest=1
    UNION ALL
    select SMSPackages.Name,
    Cast('' AS XML).value('xs:base64Binary(sql:column("SMSPackages.icon"))','varchar(MAX)')
    ,case when SMSpackages.Packagetype=0 then 'Package'
    when SMSPackages.Packagetype=4 then 'Task Sequence'
    else cast(SMSPackages.PackageType as varchar) end as 'Type'
    ,SMSPackages.PkgID as 'Identifier'
    from SMSPackages
    join v_DeploymentSummary ds on ds.PackageID=SMSPackages.PkgID
    where smspackages.packagetype in (0,4)
    END

    If @Deployed = 0
    BEGIN
    ;WITH XMLNAMESPACES ( DEFAULT 'http://schemas.microsoft.com/SystemsCenterConfigurationManager/2009/06/14/Rules', 'http://schemas.microsoft.com/SystemCenterConfigurationManager/2009/AppMgmtDigest' as p1)
    select
    cci.SDMPackageDigest.value('(/p1:AppMgmtDigest/p1:Application/p1:DisplayInfo/p1:Info/p1:Title)[1]', 'nvarchar(max)') AS [Name]
    ,cci.SDMPackageDigest.value('(/p1:AppMgmtDigest/p1:Resources/p1:Icon/p1:Data)[1]', 'nvarchar(max)') AS [Iconb],
    Type='Application'
    ,cci.CI_UniqueID as 'Identifier'
    from vCI_ConfigurationItems cci
    where citype_id=10
    and cci.IsLatest=1
    UNION ALL
    select SMSPackages.Name,
    Cast('' AS XML).value('xs:base64Binary(sql:column("SMSPackages.icon"))','varchar(MAX)')
    ,case when SMSpackages.Packagetype=0 then 'Package'
    when SMSPackages.Packagetype=4 then 'Task Sequence'
    else cast(SMSPackages.PackageType as varchar) end as 'Type'
    ,SMSPackages.PkgID as 'Identifier'
    from SMSPackages
    where smspackages.packagetype in (0,4)
    END

Now, let's say you've created a report in Report Builder and added all the columns... but that Iconb column, where everything starts with iVBORw..., means nothing to your human eye.

How to make that look like an icon in Report Builder: Add a column, and for that square in the table, right-click, Insert, Image, and select "Database" as the image source. Now, select 'Iconb' for 'Use this field', and for the MIME Type, select image/png.
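As a side note on why those Iconb values start with iVBORw: that is simply what the fixed 8-byte PNG file signature looks like once it is base64-encoded, which is also why image/png is the MIME type to pick. A small sketch that demonstrates it; the helper name Test-Base64Png is made up for illustration and is not part of any product.

```powershell
# The 8-byte signature that every PNG file starts with
[byte[]]$pngSignature = 0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A

# Base64-encode just the signature
[Convert]::ToBase64String($pngSignature)   # → iVBORw0KGgo=

# Hypothetical helper: does a base64 string look like PNG data?
function Test-Base64Png {
    param ([string]$Base64)
    # The first ten base64 characters are determined entirely by the PNG signature
    $Base64.StartsWith('iVBORw0KGg', [StringComparison]::Ordinal)
}

Test-Base64Png -Base64 'iVBORw0KGgoAAAANSUhEUg'   # → True
```

Run against a few values copied from the Iconb column, a check like this could flag any icon that was not stored as a PNG.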
